If you’ve ever deployed a serverless application using AWS Lambda and noticed frustrating delays or Gateway Timeout (504) errors, you’re not alone. Recently, I encountered an issue where my Lambda functions were hitting a cold start init timeout, resulting in failed executions and poor user experience. Diving deeper into the logs revealed the infamous INIT_REPORT Init Duration values, showing unusually long initialization times. This story is all about what I discovered, why it was costly, and the measures I took with memory tuning and provisioned concurrency to fix it.

TL;DR

My AWS Lambda was encountering cold starts that exceeded the init timeout, leading to 504 Gateway Timeout errors. Analysis showed high init durations in the logs, mostly due to large dependencies and under-provisioned memory. By increasing the function memory and using Provisioned Concurrency, I significantly reduced cold start times and restored performance. Monitoring and fine-tuning both deployment and initialization are essential when using Lambda for latency-sensitive applications.

Understanding the Problem: INIT_REPORT and Gateway Timeout

Lambda writes entries to CloudWatch Logs for several phases of a function’s execution lifecycle. One of them is the INIT phase, during which AWS prepares your function. This includes:

- Downloading the code package

- Spinning up the runtime environment

- Loading dependencies and initializing resources

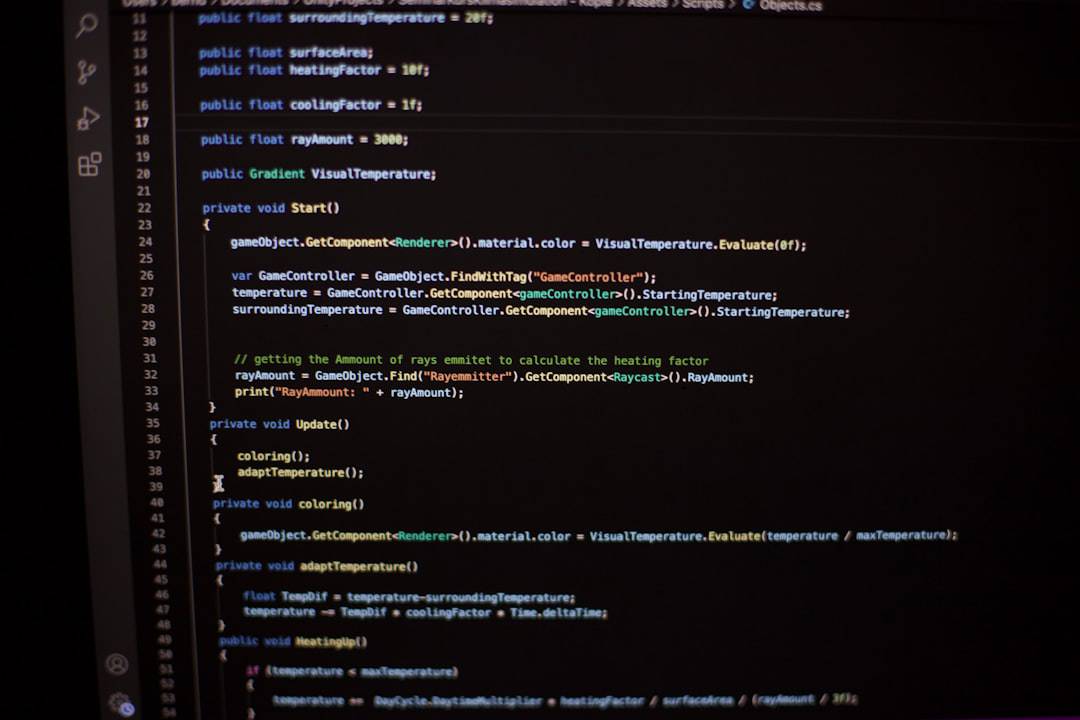

You may come across a message like this in CloudWatch logs:

INIT_START Runtime Version: nodejs18.x...
INIT_REPORT Init Duration: 9200.50 ms

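Now, 9200 milliseconds (or 9.2 seconds!) just for the initialization? That’s an issue for two reasons. First, Lambda limits the INIT phase of an on-demand function to 10 seconds, so this function was close to an init timeout on every cold start. Second, if you’re deploying behind API Gateway, the integration timeout defaults to 29 seconds, and an ALB enforces its own timeout as well. Throw in some actual execution time, and you’ll find yourself looking at the dreaded: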

504 Gateway Timeout

This was happening during what AWS calls a “cold start”, where a new Lambda container instance has to be initialized to handle the invocation. In the warm start case, this problem doesn’t appear because all the initialization is already done.
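The arithmetic behind that 504 can be sketched as a simple budget check. This is a rough illustration, not a measurement: the 29-second limit is the API Gateway ceiling mentioned later in this post, the function name and the 21-second handler time are hypothetical, and network overhead is ignored.

```javascript
// Rough latency budget for one invocation behind a gateway.
// All numbers are illustrative; measure your own function.
const GATEWAY_TIMEOUT_MS = 29_000; // API Gateway ceiling cited in this post

function coldStartBudget(initMs, handlerMs, gatewayTimeoutMs = GATEWAY_TIMEOUT_MS) {
  const totalMs = initMs + handlerMs;
  return {
    totalMs,
    headroomMs: gatewayTimeoutMs - totalMs,
    timesOut: totalMs > gatewayTimeoutMs,
  };
}

// Cold start: a 9.2 s init plus a hypothetical 21 s handler overruns the gateway.
console.log(coldStartBudget(9_200, 21_000));
// Warm start: the same handler with no init fits inside the limit.
console.log(coldStartBudget(0, 21_000));
```

The same handler either fits or fails depending only on whether the environment was already initialized, which is why the errors looked intermittent.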

What Caused My Long Init Duration?

I began investigating what was increasing the init duration so drastically. My Lambda function was relatively straightforward — a Node.js function that acted as a lightweight API handler. But when checking the code bundle size, dependencies, and execution flow, I discovered several culprits:

- Large NPM dependencies included even when unused in some branches of logic

- Multiple SDK initializations for things like AWS SDK clients, analytics, and logs

- Memory allocation too low: my function was set to only 128 MB, which also means a very small share of CPU

Here’s a deeper look at how I traced the cause. First, I enabled detailed logs and X-Ray traces. From the X-Ray service map and segment trace, I could identify how long the Initialization phase was taking per function instance. Combine that with CloudWatch Logs giving INIT_START and INIT_REPORT entries, and I had a clear picture.

The metrics were undeniable: cold start init durations were consistently above 8 seconds, sometimes even reaching 12 seconds. Even a handful of such occurrences, especially in spikes of traffic, could severely impact user experience.
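To put numbers on this, the init durations can be pulled out of exported log lines with a small script. The sketch below assumes the lines contain the text "Init Duration: <number> ms", as in the entry quoted earlier; the sample lines and the 8-second threshold are made up for illustration.

```javascript
// Extract "Init Duration" values (in ms) from Lambda log lines.
const INIT_DURATION_RE = /Init Duration: ([\d.]+) ms/;

function extractInitDurations(lines) {
  const durations = [];
  for (const line of lines) {
    const match = INIT_DURATION_RE.exec(line);
    if (match) durations.push(Number.parseFloat(match[1]));
  }
  return durations;
}

// Summarize the durations and count how many exceed a threshold.
function summarize(durations, thresholdMs) {
  if (durations.length === 0) {
    return { count: 0, maxMs: 0, meanMs: 0, overThreshold: 0 };
  }
  const sum = durations.reduce((a, b) => a + b, 0);
  return {
    count: durations.length,
    maxMs: Math.max(...durations),
    meanMs: sum / durations.length,
    overThreshold: durations.filter((d) => d > thresholdMs).length,
  };
}

// Made-up sample lines, not real log output from this incident.
const sampleLines = [
  'INIT_REPORT Init Duration: 9200.50 ms',
  'some unrelated application log line',
  'INIT_REPORT Init Duration: 8100.00 ms',
  'INIT_REPORT Init Duration: 12000.25 ms',
];

console.log(summarize(extractInitDurations(sampleLines), 8000));
```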

Steps I Took to Fix the Cold Start Problem

Now came the hard part: mitigation. I made use of a combination of strategies. Below are the key changes I made that showed real results:

1. Increased Memory Allocation

One of the little-known secrets in AWS Lambda is that increasing memory allocation doesn’t just give more memory; it also improves the CPU allocation proportionally. At the default 128 MB, my Lambda had very little compute power. After bumping this up to 512 MB, the INIT duration immediately dropped by ~40%.

Nice bonus: It didn’t significantly affect my bill, because Lambda bills for duration (in milliseconds) multiplied by allocated memory. Since each invocation now finished faster, overall costs stayed flat or improved slightly.
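Whether more memory is cost-neutral depends on how much faster the function gets. A minimal sketch of that trade-off, using GB-seconds (memory times duration) as the billing unit and ignoring per-request charges; the durations are hypothetical, not my measured values.

```javascript
// Billed compute for one invocation, in GB-seconds (memory x duration).
function gbSeconds(memoryMb, durationMs) {
  return (memoryMb / 1024) * (durationMs / 1000);
}

// Duration at the new memory size that would cost the same as the old setup.
function breakEvenDurationMs(oldMemoryMb, oldDurationMs, newMemoryMb) {
  return (oldMemoryMb * oldDurationMs) / newMemoryMb;
}

// Hypothetical durations; replace them with values from your own logs.
const before = gbSeconds(128, 800);
const after = gbSeconds(512, 250);
const breakEven = breakEvenDurationMs(128, 800, 512);

console.log({ before, after, breakEven });
```

With four times the memory, an invocation has to finish in a quarter of the time to cost exactly the same. Anything slower than that costs somewhat more per invocation, which may still be a fair price for the lower latency.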

2. Reduced Package Size and Lazy Initialization

I reviewed my deployment package and slimmed it down. I switched to ES modules where applicable, made sure the bundler could tree-shake unused code, and moved large dependency imports into the functions that use them (instead of loading them at the top of the file).

Also, I used lazy-loading for services that weren’t needed for every invocation — like analytics or third-party HTTP clients. That helped shorten the INIT phase drastically.
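The lazy-loading pattern can be sketched like this. It is a simplified illustration rather than my production code: `node:zlib` stands in for a heavy third-party package, and the handler and its event shape are hypothetical.

```javascript
// Lazy-load a dependency on first use instead of at module load (INIT) time.
// 'node:zlib' stands in for a heavy third-party package; swap in your own.
let zlibPromise; // cached so the import cost is paid at most once per environment

function loadZlib() {
  if (!zlibPromise) {
    zlibPromise = import('node:zlib');
  }
  return zlibPromise;
}

// Hypothetical handler: only the "compress" branch pays for the import.
async function handler(event) {
  if (event.action === 'compress') {
    const zlib = await loadZlib();
    const compressed = zlib.gzipSync(Buffer.from(event.payload));
    return { statusCode: 200, body: compressed.toString('base64') };
  }
  return { statusCode: 200, body: 'ok' };
}
```

The trade-off is that the first request hitting the lazy branch pays the import cost instead of the INIT phase, so this works best for dependencies that only some invocations need.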

3. Enabled Provisioned Concurrency

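This change made the largest difference. AWS offers Provisioned Concurrency, which keeps a set number of Lambda execution environments initialized and ready to respond immediately. Requests served by those environments skip the cold start entirely; only traffic beyond the provisioned count falls back to on-demand environments, which can still cold start.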

Provisioned Concurrency is configured on a published version or alias (not on $LATEST), and can be set up through:

- Lambda function alias deployments

- Infrastructure-as-Code (like Serverless Framework, SAM, or Terraform)

- Manually from the AWS Console

I started with 5 provisioned instances to cover peak traffic, especially early mornings when user activity spiked. This approach works exceptionally well for predictable workloads with regular usage patterns.

Even though there’s a cost associated with provisioned concurrency (billed separately), the benefit of ultra-low latency and no timeouts for users made it worth the investment.
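For completeness, here is one way the same setting could be applied from code with the AWS SDK for JavaScript v3. Treat it as a sketch under assumptions: the function name `my-api-handler` and alias `live` are placeholders, and the actual API call needs credentials plus the `@aws-sdk/client-lambda` package, so it is defined but not invoked here.

```javascript
// Build the input for Lambda's PutProvisionedConcurrencyConfig API.
// Function name and alias are placeholders, not values from this post.
function buildProvisionedConcurrencyInput(functionName, qualifier, instances) {
  if (qualifier === '$LATEST') {
    throw new Error('Provisioned concurrency needs a published version or alias');
  }
  if (!Number.isInteger(instances) || instances < 1) {
    throw new Error('instances must be a positive integer');
  }
  return {
    FunctionName: functionName,
    Qualifier: qualifier,
    ProvisionedConcurrentExecutions: instances,
  };
}

// Sends the request with AWS SDK v3. Requires AWS credentials and the
// @aws-sdk/client-lambda package, so it is not called in this sketch.
async function applyProvisionedConcurrency(input) {
  const { LambdaClient, PutProvisionedConcurrencyConfigCommand } =
    await import('@aws-sdk/client-lambda');
  const client = new LambdaClient({});
  return client.send(new PutProvisionedConcurrencyConfigCommand(input));
}

const input = buildProvisionedConcurrencyInput('my-api-handler', 'live', 5);
console.log(input);
```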

4. Set Function Timeout Wisely

While the 504 Gateway Timeout wasn’t fixable by changing the Lambda’s timeout limit itself (since API Gateway has a lower ceiling of 29s), I still made sure Lambda’s own timeout was set correctly (set to 25s) to keep execution safely within API Gateway’s cap.
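A related safeguard is to have the handler give up slightly before Lambda’s own timeout, so the caller gets a controlled response instead of a hard failure. The sketch below is one possible approach, not something the platform requires: `context.getRemainingTimeInMillis()` is provided by the Lambda Node.js runtime, while `doWork`, the 500 ms safety margin, and the 503 response are hypothetical choices.

```javascript
// Reject if the given promise does not settle within `ms` milliseconds.
function withDeadline(promise, ms) {
  let timer;
  const deadline = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error('deadline exceeded')), ms);
  });
  return Promise.race([promise, deadline]).finally(() => clearTimeout(timer));
}

// Wrap a unit of work so it returns a controlled error before Lambda times out.
function makeHandler(doWork, safetyMarginMs = 500) {
  return async (event, context) => {
    const budgetMs = Math.max(0, context.getRemainingTimeInMillis() - safetyMarginMs);
    try {
      const result = await withDeadline(doWork(event), budgetMs);
      return { statusCode: 200, body: JSON.stringify(result) };
    } catch (err) {
      if (err.message === 'deadline exceeded') {
        return { statusCode: 503, body: 'Upstream work did not finish in time' };
      }
      throw err; // unrelated failures still surface as errors
    }
  };
}
```

Note that the abandoned work keeps running in the background after the deadline fires; the wrapper only stops waiting for it.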

Post-Fix Observations and Learnings

After implementing these fixes, here were the key observations:

- Cold starts dropped below 1.8s, and most warm starts were <60ms

- No more INIT_TIMEOUT log reports

- Zero user-reported 504 errors

- Provisioned concurrency kept latency consistent during traffic spikes

From a DevOps perspective, charts in AWS CloudWatch and dashboards in Datadog showed smoother curves and fewer anomalies. API health improved dramatically, and I no longer worried about first-invocation penalties.

Tips for Avoiding INIT Time Pitfalls

Here are some generalized recommendations for anyone facing similar issues:

- Monitor INIT durations: Track INIT_REPORT logs and set CloudWatch alarms.

- Optimize cold start performance: Use smaller bundles, faster-starting runtimes, and avoid unnecessary work in global scope.

- Use provisioned concurrency: Especially for latency-sensitive functions behind a frontend or gateway.

- Allocate enough memory: More memory also brings proportionally more CPU, which usually means faster cold starts. Don’t skimp!

- Prefer ARM/Graviton if possible: In some cases, running on Graviton-based Lambda functions can reduce costs and improve performance.

Conclusion

AWS Lambda is a powerful and scalable compute platform, but its cold start behavior can trip up even experienced developers. Understanding the meaning behind INIT_REPORT Init Duration, analyzing memory and init behavior, and taking advantage of tools like provisioned concurrency can make a substantial difference.

If you’re using Lambda in a production-grade, user-facing application — invest the time to identify where your cold starts are coming from. The sooner you fix those INIT time bottlenecks, the more reliable and responsive your services become.

The best time to optimize your serverless functions was yesterday. The second-best time is now.